Apple's Technology Transitions


The computer industry can often move fast, with new technologies coming and going at dizzying speed. Some technologies are well enough thought out to stick around for decades, but ultimately all of them become outdated and are replaced by something newer.

The problem is that once you introduce a new technology, be it hardware or software, developers will start to use it in their apps, and people and businesses will come to rely on those apps. This means that when a technology comes to the end of its useful life, it isn't an easy choice to just replace it. You could just leave the technology there, but even that isn't an easy choice, as it requires resources to maintain, and can even prevent you from making improvements elsewhere.

Working out the correct balance between backwards compatibility and forward momentum is incredibly difficult. Microsoft is renowned for taking backwards compatibility to the extreme, supporting old (and sometimes ancient) software as much as possible. Apple takes a different approach, switching technologies much more frequently, and breaking compatibility as a result. The most recent example of this is the dropping of support for 32-bit applications in macOS 10.15, which has caused a fair bit of controversy.

A lot of the controversy comes down to people not understanding the how or the why of these transitions, and why Apple ultimately drops the old technology. So I thought it would be useful to explore Apple's history of transitions and try to explain some of the reasons for this latest one in a way everyone can understand.

Apple's Past Transitions

Apple's transitions can largely be put into 2 groups: CPU transitions and API transitions.

CPU transitions

CPUs (Central Processing Units) are the core of any computer. At their most basic, CPUs take a list of instructions and run through them. These instructions can involve fetching data from your computer's memory, adding two numbers together, comparing two values, and much more.

The instructions a CPU can execute are collectively called its Instruction Set. If you build a piece of software for a particular Instruction Set, then in theory your software will run on any CPU that uses that Instruction Set, regardless of who made it. Conversely, this means that software built for one Instruction Set won't run on a CPU with a different Instruction Set.

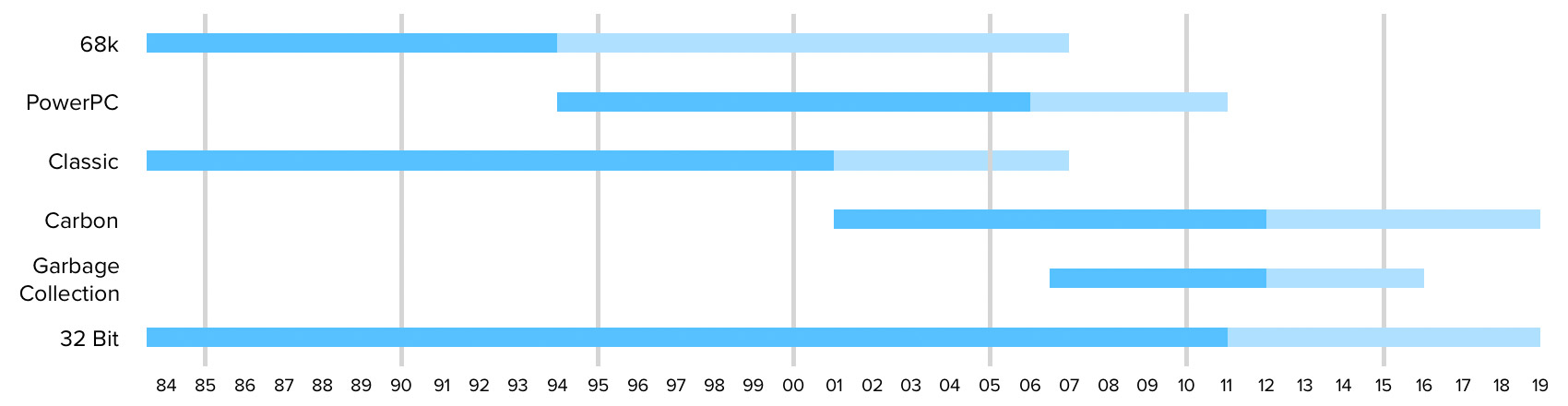
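To make the idea concrete, here is a minimal Python sketch using two made-up instruction sets (`ISA_A` and `ISA_B` are hypothetical, not real CPUs). Both support the same three operations but encode them with different opcode bytes, so a program built for one is meaningless to a CPU that understands only the other.

```python
# Two hypothetical instruction sets with the same operations but different
# opcode bytes. Neither corresponds to a real CPU.
ISA_A = {0x01: "LOAD", 0x02: "ADD", 0x03: "PRINT"}
ISA_B = {0x10: "LOAD", 0x20: "ADD", 0x30: "PRINT"}


def run(program, isa):
    """Execute (opcode, operand) pairs on a toy CPU that understands `isa`."""
    acc = 0
    output = []
    for opcode, operand in program:
        op = isa.get(opcode)
        if op is None:
            raise ValueError(f"illegal instruction 0x{opcode:02x}")
        if op == "LOAD":
            acc = operand
        elif op == "ADD":
            acc += operand
        elif op == "PRINT":
            output.append(acc)
    return output


# The same program (load 2, add 3, print), built once for each instruction set.
program_for_a = [(0x01, 2), (0x02, 3), (0x03, 0)]
program_for_b = [(0x10, 2), (0x20, 3), (0x30, 0)]

print(run(program_for_a, ISA_A))  # [5]
print(run(program_for_b, ISA_B))  # [5]
# run(program_for_a, ISA_B) raises ValueError: CPU B doesn't know opcode 0x01.
```

Here the foreign bytes fail cleanly; on real hardware they are just as likely to decode as different, valid instructions and quietly do the wrong thing.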

Apple has switched Instruction Sets multiple times over its history.

68k to PowerPC (1994)

The first switch for the Mac was from the Motorola 68k series of processors to PowerPC processors in 1994. In the early 90s, the Mac was starting to lag behind other computers, especially Windows PCs and their Intel processors. Apple was also encountering issues with Motorola: Motorola's new 88k series of processors was not well received, and Apple was having supply issues with the 68k series.

Apple decided to look elsewhere and ended up working with IBM to produce a version of their POWER processors that was suitable for desktop and laptop computers. They also brought Motorola in, forming what would be known as the AIM alliance. Together they produced the first PowerPC processor.

The Mac already had a large collection of existing software built for the 68k series of processors, so Apple turned to emulation technology to ensure this software would run on their new PowerPC Macs. Emulation software effectively simulates a piece of physical hardware in software. It usually leads to a performance loss, as each emulated instruction has to be handled by software rather than run directly on the hardware, but it ensures that the old software continues to run.
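As a rough illustration of how emulation works in general (not how Apple's 68k emulator was built), the Python sketch below models a hypothetical guest CPU in software. The guest instruction set (`HALT`, `MOVEI`, `ADD`) is invented. Every guest instruction has to be fetched, decoded and dispatched by host code before any real work happens, which is where the performance loss comes from.

```python
# A toy emulator: a software model of a hypothetical "guest" CPU, run by
# whatever host CPU executes this Python. The guest instruction set is
# invented for illustration; it is not the 68k instruction set.
from dataclasses import dataclass, field

HALT, MOVEI, ADD = 0, 1, 2  # hypothetical guest opcodes


@dataclass
class GuestCPU:
    registers: list = field(default_factory=lambda: [0] * 4)
    pc: int = 0  # program counter: address of the next guest instruction


def emulate(cpu, memory):
    """Fetch, decode and execute guest instructions until HALT."""
    while True:
        opcode = memory[cpu.pc]                      # fetch
        if opcode == HALT:                           # decode + execute
            return cpu
        a, b = memory[cpu.pc + 1], memory[cpu.pc + 2]
        if opcode == MOVEI:                          # reg[a] = b
            cpu.registers[a] = b
        elif opcode == ADD:                          # reg[a] += reg[b], 32 bit wrap
            cpu.registers[a] = (cpu.registers[a] + cpu.registers[b]) & 0xFFFFFFFF
        else:
            raise ValueError(f"unknown guest opcode {opcode}")
        cpu.pc += 3                                  # every non-HALT op is 3 bytes


# Guest program in "guest memory": r0 = 40; r1 = 2; r0 = r0 + r1; halt
memory = bytes([MOVEI, 0, 40, MOVEI, 1, 2, ADD, 0, 1, HALT])
cpu = emulate(GuestCPU(), memory)
print(cpu.registers[0])  # 42
```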

This emulation layer worked incredibly well, and enabled Apple to continue to support software written for 68k until 2007, when Mac OS X 10.5 dropped support for the Classic environment (which will be covered later on).

PowerPC to Intel (2006)

When PowerPC was first introduced it was a very powerful CPU, often beating out the competition from Intel. This lasted through the rest of the 90s, but at the turn of the millennium Apple encountered further problems. The PowerPC G4 was being produced by Motorola, but its performance had stagnated and it struggled to get beyond 1.7GHz. It was around this time that Apple started looking at the Intel processors that were regularly beating them.

PowerPC got a short reprieve when IBM introduced the PowerPC G5, a 64 bit chip with a large performance bump over the G4. This allowed Apple to produce the much loved PowerMac G5 and retake the performance crown. Unfortunately, similar problems cropped up again a few years later. IBM wasn't able to get the G5 to reach 3GHz, which Intel and AMD were doing regularly. Even worse, the power consumption and heat output of the G5 made it impossible to put in Apple's laptops. So in 2005 Apple bit the bullet and announced it would be transitioning its entire lineup to Intel.

Much like with the transition from 68k, Apple needed a way for PowerPC software to run on their new Intel Macs. This time they licensed a technology called QuickTransit, which dynamically translated CPU instructions from one Instruction Set to another, and used it to build their Rosetta feature. This allowed PowerPC software to continue to run until the feature was dropped in 2011 with the release of Mac OS X 10.7.
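The key difference from emulation is that a dynamic translator converts a chunk of foreign code into native code once and then reuses the result. The following Python sketch shows that idea with an invented guest instruction set (`ADDI`, `JNZ`, `HALT`); the host "native code" is just a Python function, and none of this reflects the internals of QuickTransit or Rosetta.

```python
# A toy dynamic binary translator. Guest code uses an invented instruction
# set (written as tuples for readability). Each basic block is translated
# once into a host-native Python function, cached by guest address, and
# reused on every later visit. Not a model of QuickTransit or Rosetta.

def translate_block(code, start):
    """Translate guest instructions from `start` up to and including a branch."""
    ops, pc = [], start
    while True:
        inst = code[pc]
        ops.append(inst)
        pc += 1
        if inst[0] in ("JNZ", "HALT"):
            break
    fallthrough = pc

    def host_function(regs):
        # Runs the whole block and returns the next guest address (None = halt).
        for inst in ops:
            if inst[0] == "ADDI":          # reg += immediate
                regs[inst[1]] += inst[2]
            elif inst[0] == "JNZ":         # jump to target if reg != 0
                return inst[2] if regs[inst[1]] != 0 else fallthrough
            elif inst[0] == "HALT":
                return None
        return fallthrough

    return host_function


def run(code):
    regs, cache, translations, pc = [0] * 4, {}, 0, 0
    while pc is not None:
        if pc not in cache:                # translate only on the first visit
            cache[pc] = translate_block(code, pc)
            translations += 1
        pc = cache[pc](regs)
    return regs, translations


# r0 = 5; loop: r1 += 10; r0 -= 1; jump back to loop while r0 != 0; halt
guest = [("ADDI", 0, 5), ("ADDI", 1, 10), ("ADDI", 0, -1), ("JNZ", 0, 1), ("HALT",)]
regs, translations = run(guest)
print(regs[1], translations)  # 50 3
```

The block starting at the loop is translated once and then served from the cache on every later iteration, so the translation cost is spread across all of them. An emulator, by contrast, pays the decoding cost on every instruction, every time.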

Intel to ARM

The transition from Intel to the ARM architecture is an interesting one, as it has largely affected only Apple to date. When first creating the iPhone, Apple had a choice of what to base the operating system on. Some wanted to base it on the iPod's software, which already ran on ARM. However, the faction that won out was the one that wanted to port Mac OS X to ARM, and that port formed the basis of what we now know as iOS.

As there was no need to port existing software over, and no legacy hardware to support, Apple was able to make this transition with relative ease. However, the transition may not yet be complete. As Apple's A-series of CPUs gets more powerful, they are starting to compete with Intel's offerings, surpassing them in both performance and energy efficiency at the low end. If this trend continues, it is likely that a transition to the ARM architecture (at least for low-end Macs) will be the next major CPU transition Apple embarks on.

Fat Binaries

One key aspect of these transitions was Apple's use of Fat Binaries. While emulation and translation allow old software to run on new hardware without the developer needing to change anything, there will still be many people using old hardware who would like to keep using newer software, even after it natively supports new hardware.

The most basic way of doing this would be for a developer to ship two versions of an app, one that runs on the old hardware and one that runs on the new. Unfortunately this requires developers to manage all these different versions, and requires users to ensure they get the correct version. And if the user upgrades their hardware, they need to re-install all their software.

Fat Binaries provide a more elegant solution. They include multiple versions of the executable code in a single app. For example, a single app download could contain native code for both PowerPC and Intel. This means the exact same app could be run on a PowerPC Mac and an Intel Mac, and run at native speeds on both. Apple applied this technology through all their CPU transitions, including the 32 to 64 bit transition (as we'll see later).
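On the Mac, a Fat Binary (Apple also called these Universal Binaries) starts with a small header listing each architecture's code and where it sits in the file. The Python sketch below builds and parses such a header. The magic number and CPU type constants are taken from Apple's `<mach-o/fat.h>` and `<mach/machine.h>` headers; the file contents are fake, and real files page-align each slice, which this sketch skips. The helper names are hypothetical.

```python
import struct

# Constants from Apple's <mach-o/fat.h> and <mach/machine.h>.
FAT_MAGIC = 0xCAFEBABE
CPU_ARCH_ABI64 = 0x01000000
CPU_TYPE_X86 = 7
CPU_TYPE_POWERPC = 18
ARCH_NAMES = {
    CPU_TYPE_POWERPC: "ppc",
    CPU_TYPE_POWERPC | CPU_ARCH_ABI64: "ppc64",
    CPU_TYPE_X86: "i386",
    CPU_TYPE_X86 | CPU_ARCH_ABI64: "x86_64",
}


def parse_fat_header(data):
    """Return (arch_name, offset, size) for each slice in a fat binary."""
    magic, nfat_arch = struct.unpack_from(">II", data, 0)  # big-endian header
    if magic != FAT_MAGIC:
        raise ValueError("not a fat binary")
    slices = []
    for i in range(nfat_arch):
        # fat_arch entry: cputype, cpusubtype, offset, size, align (20 bytes)
        cputype, _sub, offset, size, _align = struct.unpack_from(">iiIII", data, 8 + 20 * i)
        slices.append((ARCH_NAMES.get(cputype, hex(cputype)), offset, size))
    return slices


def pick_slice(data, host_arch):
    """Return the code a loader on `host_arch` would run natively."""
    for name, offset, size in parse_fat_header(data):
        if name == host_arch:
            return data[offset:offset + size]
    raise ValueError(f"no native code for {host_arch}")


# A fake two-architecture file: 48-byte header, then each slice's "code".
ppc_code, intel_code = b"PPC-CODE", b"INTEL-CODE"
fake = struct.pack(">II", FAT_MAGIC, 2)
fake += struct.pack(">iiIII", CPU_TYPE_POWERPC, 0, 48, len(ppc_code), 0)
fake += struct.pack(">iiIII", CPU_TYPE_X86, 3, 56, len(intel_code), 0)
fake += ppc_code + intel_code

print(pick_slice(fake, "ppc"))   # b'PPC-CODE'
print(pick_slice(fake, "i386"))  # b'INTEL-CODE'
```

The loader reads only the slice matching the CPU it is running on and ignores the rest, which is why one download can run natively on both kinds of Mac.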

API Transitions

APIs (Application Programming Interfaces) are collections of code provided by platform vendors to allow developers to write software. They handle a wide range of things, from reading and writing files, to fetching data over a network, to mathematical functions, to drawing images and UI elements on screen. It is common for these APIs to evolve over time, with new APIs being added and old ones being deprecated (an indication that developers should no longer use them, and that they might disappear at some point).

However, occasionally an API can be completely replaced. These usually require re-writing large swathes of code in apps, and can even require rethinking how an app works. There are 3 big changes that pertain to the Mac:

Classic to OS X

When Apple introduced Mac OS X, it was the biggest shift in software on almost any major platform. Everything was changed from top to bottom as Apple merged the NeXTStep OS it had acquired in 1996 with Mac OS. These changes were so large that pretty much every existing piece of Mac software would not run on Mac OS X without significant changes.

In order to solve this, Apple introduced the Classic environment to Mac OS X, which virtualised Mac OS 9, the last version of the "Classic" Mac OS. Virtualisation is somewhat similar to emulation, however it does not simulate hardware that doesn't exist in your computer. Instead, it effectively segments your computer, allowing you to run multiple operating systems at the same time. The operating systems both run natively on the hardware, but they are sharing the CPU, RAM, storage, etc.

When starting a classic app on Mac OS X, you would see a window appear that showed Mac OS 9 booting. Once it had completed, the app would launch. You could move the window around as with any native Mac OS X window, though it would appear distinctly Mac OS 9, and would be limited to the functionality Mac OS 9 provided. Unfortunately Mac OS 9 did not run on Intel CPUs, so it was not supported on the first Intel Macs released in 2006. It was dropped completely in 2007 with the release of Mac OS X 10.5.

Carbon to Cocoa

When Apple merged NeXTStep and Mac OS into Mac OS X, they also merged the methods for building software. Doing so required providing an easy migration path for old Mac apps, as well as for old NeXTStep apps. The result was two competing APIs: Cocoa and Carbon.

Cocoa was based on the NeXTStep APIs. These APIs used the Objective-C language and have a rich history, being used to build software such as the first web browser, Macromedia FreeHand, and the level editors for games such as Doom and Quake. These APIs were mostly brought over as-is, though a few features were changed to accommodate the parts of the new OS that came from Mac OS.

On the other hand, Carbon was based on the Mac Toolbox APIs from Mac OS, which used the C language. This provided a much simpler way to port classic Mac software to Mac OS X, and was used to port software such as Microsoft Office and Adobe Photoshop.

Initially, it seemed the plan was for the two APIs to co-exist, but over time more and more new OS features required Cocoa. The beginning of the end for Carbon came with the introduction of 64 bit applications: while Cocoa became 64 bit, Carbon did not. Carbon was eventually deprecated in 2012 and was removed as part of macOS 10.15.

Garbage Collection

This last API transition is a bit of a quirk for Apple. Normally, when a new technology is released, it is the old technology that gets removed. Objective-C Garbage Collection lasted less than a decade before it was removed, while the technology it aimed to replace lives on.

Garbage Collection (GC) is a way for software to manage RAM. Traditionally, when a piece of software wanted to use some RAM it had to "allocate" a chunk of RAM. Once it was finished with that RAM it then had to "deallocate" it, to free it up for the rest of the system to use. This is a highly error-prone method that can lead to crashes and ballooning memory use if not done perfectly. GC tries to automate this for developers, keeping track of memory, detecting when some memory is no longer needed, and periodically cleaning it up.
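The detect-and-clean-up step can be sketched as a classic mark-and-sweep collector: starting from the objects the program can reach directly (the "roots"), mark everything reachable, then free everything else. This Python version, with a hypothetical `Heap` class, is a generic illustration of the technique, not a model of Apple's Objective-C collector.

```python
class Heap:
    """A toy heap: object id -> list of ids that object references."""

    def __init__(self):
        self.objects = {}
        self.roots = set()  # objects the program can reach directly
        self.next_id = 0

    def allocate(self, refs=()):
        obj_id = self.next_id
        self.next_id += 1
        self.objects[obj_id] = list(refs)
        return obj_id

    def collect(self):
        """Free every object that is no longer reachable from a root."""
        marked, stack = set(), list(self.roots)
        while stack:                                # mark phase
            obj_id = stack.pop()
            if obj_id not in marked:
                marked.add(obj_id)
                stack.extend(self.objects[obj_id])
        garbage = [o for o in self.objects if o not in marked]
        for obj_id in garbage:                      # sweep phase
            del self.objects[obj_id]
        return garbage


heap = Heap()
a = heap.allocate()
b = heap.allocate([a])  # b references a
c = heap.allocate()     # nothing references c
heap.roots.add(b)
print(heap.collect())   # [2]: c was unreachable
heap.roots.clear()      # the program drops its last reference to b
print(heap.collect())   # [0, 1]: a and b are now garbage too
```

The program never says when `c` should be freed; the collector discovers it, at a moment of its own choosing.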

Apple introduced Garbage Collection for Objective-C in 2007 with Mac OS X 10.5. This was quite an achievement, as Objective-C is an extension to the C programming language, which doesn't lend itself well to GC. Unfortunately, GC has downsides. By periodically cleaning up memory, it can cause momentary stutters in software that are outside of the developer's control. While it keeps memory from ballooning out of control due to bugs, it does require more memory to run than manually managed code. And the Objective-C GC did not support C-based APIs, which meant the memory bugs mentioned above were still very likely to occur.

These all presented a problem for the iPhone with its limited resources, so Garbage Collection never made it to iOS. Instead, Apple introduced Automatic Reference Counting (ARC). Rather than tracking memory while the application runs like GC, ARC works out, when the software is compiled, where memory needs to be retained and released, and inserts that code automatically, so each object is freed as soon as nothing refers to it any more. This sidesteps the increased memory usage and periodic stuttering of GC. It ultimately gave the best of both worlds, while also allowing support for some C APIs.
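Reference counting, the mechanism ARC automates, can be sketched in a few lines. Each object counts its owners: `retain` adds one, `release` removes one, and the object is freed the moment the count reaches zero. Before ARC, Objective-C developers wrote these calls by hand; with ARC the compiler inserts them. The `RefCounted` class below is a hypothetical Python stand-in, not Apple's runtime API.

```python
class RefCounted:
    """Hypothetical stand-in for a reference-counted object."""

    live_objects = 0

    def __init__(self, name):
        self.name = name
        self.retain_count = 1       # whoever creates it owns one reference
        RefCounted.live_objects += 1

    def retain(self):
        self.retain_count += 1
        return self

    def release(self):
        self.retain_count -= 1
        if self.retain_count == 0:  # last owner gone: free it right now
            RefCounted.live_objects -= 1
            print(f"dealloc {self.name}")


def example():
    # With ARC, calls like these are inserted by the compiler, not written by hand.
    photo = RefCounted("photo")     # count 1
    album = [photo.retain()]        # also stored in a list: count 2
    photo.release()                 # local variable goes out of scope: count 1
    album.pop().release()           # removed from the album: count 0, freed


example()  # prints "dealloc photo"
```

Because the object is freed at a known point in the code rather than during a later collection pass, there is no periodic clean-up to cause stutters.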

Unfortunately this meant that the Garbage Collector was obsolete, so Apple deprecated it in 2012 with Mac OS X 10.8, just 5 years after being introduced. It was finally removed with macOS 10.12 in 2016. Coincidentally this is the first transition where a piece of software I wrote was rendered unusable as I had stopped developing it before I could transition it to ARC.

When Apple Did Both At Once

One thing you'll note about these transitions is that Apple did one at a time. They either changed the CPU or the APIs, but never both at once. However, there is one instance of Apple doing both at once, and it's actually the first major transition they underwent: going from the Apple II to the Macintosh.

The Apple II was one of the first successful personal computers. First developed in 1977, the Apple II line continued to be developed and sold until 1993, 9 years after the introduction of the Macintosh. All Apple IIs used a CPU from the 6502 family, first developed by MOS Technology, whereas the Mac used the Motorola 68k. The Apple II also ran Apple DOS, and later ProDOS, as its operating system. These differences prevented software from the Apple II from being run on the Mac, which partially contributed to the prolonged success of the Apple II.

Eventually Apple did discontinue the Apple II, after sales dropped well below that of the Mac. However, Apple extended the life of Apple II software in a somewhat unique way, at least for Apple. They introduced the Apple IIe expansion card for the Mac. This effectively fit Apple II hardware onto an expansion card, and coupled with emulation software allowed Apple II software to be run on the Mac, at least until 1996 when the card was discontinued.

64 Bits

The transition that I want to talk about most though is the transition from 32 bit to 64 bit, as the last stage of this transition is what prompted this post. This is fundamentally another CPU transition, but is slightly different to other Instruction Set transitions. Rather than determining what instructions can be run, this change affects how big a chunk of data the CPU can work on in a single operation. For example, a 32 bit CPU can work on up to 32 zeros and ones in a single instruction. If it wants to work on more data, it needs to split it up into separate 32 bit chunks.
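To see what splitting data into chunks means in practice, the Python sketch below adds two 64 bit numbers using only 32 bit additions, the way a 32 bit CPU has to: add the low halves, then add the high halves plus any carry. The function names are illustrative; on a 64 bit CPU the same addition is a single instruction.

```python
MASK32 = 0xFFFFFFFF  # the largest value a 32 bit register can hold


def add32(a, b, carry_in=0):
    """One 32 bit add-with-carry: returns (low 32 bits, carry out)."""
    total = a + b + carry_in
    return total & MASK32, total >> 32


def add64_on_32bit_cpu(x, y):
    """Add two 64 bit numbers using two 32 bit additions (wraps at 64 bits)."""
    lo, carry = add32(x & MASK32, y & MASK32)   # low halves first
    hi, _ = add32(x >> 32, y >> 32, carry)      # then high halves plus carry
    return (hi << 32) | lo


print(hex(add64_on_32bit_cpu(0xFFFFFFFF, 1)))  # 0x100000000
```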

Crucially, this also affects how much RAM your system can have. RAM is effectively a grid of bytes (each byte containing 8 zeros and ones) that can be accessed in any order (hence the name Random Access Memory). To access one of these bytes you need an address, which is just a number, and the size of this number is limited by the bit size the processor can use. On a 32 bit system there are 2³² or 4,294,967,296 possible addresses. So a 32 bit process can access up to 4.29 billion bytes of RAM, or 4GB.
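The arithmetic is easy to check. This short Python sketch (the function names are illustrative) counts the byte addresses available at each width and shows what happens when you step past the last 32 bit address: the number simply wraps back to zero, so there is no way to name a byte beyond the 4GB mark. The "4GB" here is 4 binary gigabytes (GiB, 2³⁰ bytes each).

```python
def addressable_bytes(address_bits):
    """How many distinct byte addresses an N-bit number can name."""
    return 2 ** address_bits


def next_address(addr, address_bits):
    """Step to the next address the way N-bit arithmetic does: it wraps."""
    return (addr + 1) % 2 ** address_bits


for bits in (32, 64):
    n = addressable_bytes(bits)
    print(f"{bits} bit: {n:,} bytes = {n // 2**30:,} GiB")

print(hex(next_address(0xFFFFFFFF, 32)))  # 0x0: the address wraps around
```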

When 32 bit was introduced, this wasn't a problem, as even a few MB of RAM was considered the realm of supercomputers. But by the turn of the millennium we were fast approaching the 4GB limit, so work began on bringing 64 bit to personal computers. It can be hard to comprehend how big a 64 bit number is, as it can represent 2⁶⁴, or 18.4 quintillion, different values. To help appreciate how big this number is, imagine counting to 4.29 billion, the number of values you can hold in 32 bits, saying 1 number every second without sleeping, eating, or drinking. Doing this would take you 136 years. If you continued to count to 18.4 quintillion at the same rate, it would take you 584.9 billion years, or around 42 times the age of the universe. Needless to say, it's unlikely we'll run into this problem with RAM again any time soon.
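The counting figures can be reproduced with a few lines of Python, assuming 365-day years (no leap years) and a universe roughly 13.8 billion years old.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 365-day years, ignoring leap years
AGE_OF_UNIVERSE_YEARS = 13.8e9         # commonly cited estimate

years_32 = 2**32 / SECONDS_PER_YEAR    # one number per second up to 2^32
years_64 = 2**64 / SECONDS_PER_YEAR

print(f"{years_32:.0f} years")                                # 136 years
print(f"{years_64 / 1e9:.1f} billion years")                  # 584.9 billion years
print(f"{years_64 / AGE_OF_UNIVERSE_YEARS:.0f} x the age of the universe")  # 42 x ...
```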

Apple's 64 Bit Transition

Apple's first 64 bit computer was the PowerMac G5, released in 2003. Apple released special builds of Mac OS X 10.2.7 and 10.2.8 to run on these machines, but they didn't support software actually using the 64 bit capabilities of the processor.

Apple's transition to 64 bit was done gradually over several releases of Mac OS X, between 2003 and 2007. This was helped by the fact that the G5 (and later, 64 bit Intel CPUs) can still run 32 bit code natively with little to no performance loss. To understand how they transitioned we need to take a brief detour into how Mac OS X is structured.

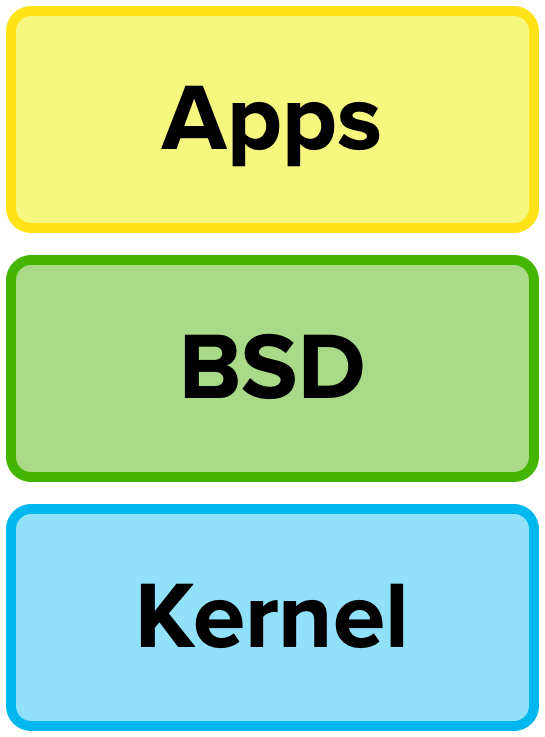

At a massively simplified scale, there are 3 key levels to Mac OS X: the Kernel, the BSD layer, and the App layer.

- The Kernel is the core of the OS, managing the fine details of things like memory management and access to storage, the network, and peripherals.

- The BSD layer is a set of UNIX tools and APIs that don't require a user interface to use. If you use Terminal at all, you have used tools in this layer.

- The App layer is where the Cocoa and Carbon APIs mentioned earlier reside. All the apps you run that have a UI run in this layer.

Apple decided to migrate each of these layers in subsequent OS releases, easing the transition to 64 bit for both developers and users.

With Mac OS X 10.3 (released in 2003), Apple migrated the Kernel to 64 bit, along with some performance-sensitive maths APIs. This meant that a 64 bit system could use more than 4GB of RAM, and developers could write software that utilised some of the 64 bit capabilities of the CPU, albeit a rather limited set.

With Mac OS X 10.4 (released in 2005), Apple migrated the BSD layer to 64 bit. This meant that individual processes on the computer could access more than 4GB of RAM (while 10.3 allowed the overall system to use more than 4GB of RAM, each process was still limited to 4GB). Developers could now use 64 bit more extensively, as the BSD layer contains many APIs for disk access, networking, image processing, etc. However, they had to run this 64 bit code in a separate process from the UI portion of their app, which added some complexity.

Finally, with Mac OS X 10.5 (released in 2007), Apple migrated the Cocoa APIs to 64 bit. This meant that developers could create a fully 64 bit app without any compromises. Much like with the transition to Intel, this was achieved using Fat Binaries, which meant that between 2007 and 2011 many apps (and the operating system itself) actually contained 4 versions, one each for 32 bit PowerPC, 64 bit PowerPC, 32 bit Intel, and 64 bit Intel.

A combination of this gradual approach and Fat Binaries meant the migration was relatively seamless for users. It was a bit more complicated for developers, but arguably much easier than the many other transitions they had to face over the previous decade. Apple finally dropped support for 32 bit Macs in 2011, 4 years after shipping their last 32 bit hardware, though support for running 32 bit software remained until 2019.

How Microsoft Went 64 Bit

At this point it's useful to compare this approach to how Microsoft approached the 64 bit transition. Microsoft released Windows XP Professional x64 Edition in 2005. Unlike Mac OS X, this was a different OS version that required you to re-install Windows.

While many 32 bit apps ran just fine (thanks to 64 bit CPUs running 32 bit software natively), it did give Microsoft a rare opportunity to drop support for some software. 64 bit versions of Windows required new device drivers, and support for older 16 bit software was dropped. However, their full set of 32 bit APIs was maintained.

Since then, Microsoft have released 32 and 64 bit editions of every release of Windows. This, coupled with the long-lasting 32 bit APIs, has meant that a lot of software has remained 32 bit only, even as the bulk of computers today are sold with 64 bit Windows installed. This fact is actually partially responsible for the issues with dropping 32 bit on the Mac. The Intel switch made it much easier for developers, especially game developers, to port their software to the Mac. But as many of these developers were focused primarily on Windows, they kept their software 32 bit-only.

Why Drop 32 Bit?

Dropping support for 32 bit software is only partially to do with 32 bits itself. Unsurprisingly, shipping both 32 and 64 bit versions as a single unit takes up more disk space. The 32 bit system libraries took up over 500MB on macOS 10.14. They also use up a lot of RAM.

Every app running on your machine needs to access some subset of the system APIs. Rather than loading these into memory separately for each app, the OS will load them once and share them with all the apps. When you have multiple apps running, this saves huge amounts of RAM. The problem is the 32 and 64 bit versions of these APIs have to be loaded separately. Now macOS is smart enough not to load these into memory until needed, but they remain there once they have been loaded. This means that if you launch a single 32 bit app, even for just a second, you will have 32 bit system APIs taking up some of your memory until you restart the system.
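The following Python sketch models the behaviour described above: libraries are loaded lazily, once per architecture, shared between apps of the same architecture, and never unloaded. The library names and sizes are hypothetical, chosen only to show the effect; they are not measurements from macOS.

```python
# Hypothetical library sizes, for illustration only.
LIBRARY_SIZES_MB = {"libSystem": 40, "AppKit": 120, "Foundation": 60}


class SharedLibraryCache:
    def __init__(self):
        self.loaded = set()  # (library, architecture) pairs resident in RAM

    def launch(self, arch, libraries):
        for lib in libraries:
            self.loaded.add((lib, arch))  # no-op if already loaded and shared

    def resident_mb(self):
        return sum(LIBRARY_SIZES_MB[lib] for lib, _arch in self.loaded)


cache = SharedLibraryCache()
for _ in range(10):  # ten 64 bit apps all share one copy
    cache.launch("x86_64", ["libSystem", "AppKit", "Foundation"])
print(cache.resident_mb())  # 220

cache.launch("i386", ["libSystem", "AppKit", "Foundation"])  # one brief 32 bit app
print(cache.resident_mb())  # 440, and nothing here ever unloads it
```

In this model, ten 64 bit apps cost no more than one, but a single 32 bit launch doubles the resident footprint.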

iOS suffered from the exact same problem (unsurprising given the shared foundation with macOS). Unfortunately iOS devices don't have the same storage or RAM capacity that a Mac does, so this proved a much more critical issue. Even though Apple only introduced the first 64 bit iPhone in 2013 with the 5S, they dropped support for 32 bit software in 2017 with iOS 11, barely 4 years later.

While this saving of disk space and RAM certainly benefits the Mac, there are arguably more important reasons for Apple to drop 32 bit on the Mac. They don't actually have much to do with 32 bit itself, but more with decisions that were made in 2007 when 64 bit was finalised.

The Objective-C Runtime

Objective-C is the main programming language for Cocoa. While Swift has taken off in recent years, the vast majority of the Cocoa APIs are Objective-C. Objective-C is a heavily runtime-based language. A runtime is some code that sets up and controls the environment in which an app runs. Some languages have very small runtimes that do little after launching the app. Others, like Objective-C, interact with the runtime throughout the entire time the app is running.
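To give a feel for what interacting with the runtime throughout an app's life means, here is a toy Python model of Objective-C style message dispatch: every call is looked up by name (a "selector") in the receiver's class at run time, walking up to superclasses if needed, with a per-class cache. The `RuntimeClass` and `send` names are hypothetical; the real runtime does this in heavily optimised code such as `objc_msgSend`.

```python
class RuntimeClass:
    """Hypothetical class object: a method table plus a superclass link."""

    def __init__(self, name, methods, superclass=None):
        self.name = name
        self.methods = dict(methods)  # selector -> function(receiver)
        self.superclass = superclass
        self.cache = {}               # selector -> function, filled on first use


def send(receiver, selector):
    """Find the method for `selector` at run time and call it."""
    cls = receiver["isa"]             # every object records its class
    method = cls.cache.get(selector)
    if method is None:
        owner = cls
        while owner is not None and selector not in owner.methods:
            owner = owner.superclass  # walk up the inheritance chain
        if owner is None:
            raise AttributeError(f"{cls.name} does not respond to {selector}")
        method = owner.methods[selector]
        cls.cache[selector] = method
    return method(receiver)


Person = RuntimeClass("Person", {"name": lambda self: self["name"]})
PetOwningPerson = RuntimeClass(
    "PetOwningPerson", {"ownsCats": lambda self: self["ownsCats"]}, superclass=Person
)

alice = {"isa": PetOwningPerson, "name": "Alice", "ownsCats": True}
print(send(alice, "name"))   # Alice (found in the superclass at run time)

# Lookup happens while the program runs, so methods can be added on the fly.
Person.methods["greet"] = lambda self: f"Hello, {self['name']}"
print(send(alice, "greet"))  # Hello, Alice
```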

When Apple introduced 64 bit with Mac OS X 10.5, they also introduced Objective-C 2.0. Part of this was a new and improved runtime, designed to fix problems with the old runtime. Unfortunately, these fixes were not compatible with existing apps, so they made the decision to only make this runtime available in 64 bit. However, this meant the (now) legacy runtime would have to stick around as long as 32 bit apps existed.

One of the problems this new runtime fixed is quite an important one: fragile ivars. This will take a bit of explaining, but hopefully by the end of it you'll begin to appreciate how dropping 32 bit apps, and by extension the legacy Objective-C runtime, will help Apple improve the Mac going forward. I will begin by explaining some basic programming concepts, so feel free to skip the next few paragraphs if you are already a programmer.

Objective-C is an Object Oriented Programming Language. This means that the fundamental building blocks developers use are Objects. An Object contains a combination of data and functions to act upon that data. Each piece of data can be anything from numbers to text, or even other objects. In Objective-C, these pieces of data are called Instance Variables, or ivars for short.

In the legacy runtime, ivars are laid out in a list when you compile your application. In order to access an ivar the runtime needs to know the offset from the start of the object. In the example below you can see we have name, age, and parent ivars. The numbers on the left are the offset from the start. name is offset by 0 as it is at the start of the list. age is offset by 8, as the Text data above it requires 8 bytes to store it. The parent ivar is offset by a further 4 (the number of bytes required to store a Number) making its offset 12.

Person

| Offset | Ivar | Type |
| --- | --- | --- |
| 0 | name | Text |
| 8 | age | Number |
| 12 | parent | Person |

The next concept in Object Oriented Programming is the idea of inheritance. One object may inherit from another, taking all its ivars and functions and adding some more. Let's imagine that Apple provides us with the Person object as part of their APIs, but we also want to store whether or not the person owns any pets. We can create a new object that inherits from Person and extends it. It would look like this:

PetOwningPerson

| Offset | Ivar | Type |
| --- | --- | --- |
| 0 | name | Text |
| 8 | age | Number |
| 12 | parent | Person |
| 20 | ownsFish | Boolean |
| 21 | ownsCats | Boolean |
| 22 | ownsDogs | Boolean |

You can see that the ivars we added have been attached to the end. The problem comes when Apple wants to update the Person object, because these offsets are fixed. Let's say they add a hasChildren ivar. In the legacy runtime this is what would happen:

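Person

| Offset | Ivar | Type |
| --- | --- | --- |
| 0 | name | Text |
| 8 | age | Number |
| 12 | parent | Person |
| 20 | hasChildren | Boolean |

PetOwningPerson

| Offset | Ivar | Type |
| --- | --- | --- |
| 0 | name | Text |
| 8 | age | Number |
| 12 | parent | Person |
| 20 | ownsFish | Boolean |
| 21 | ownsCats | Boolean |
| 22 | ownsDogs | Boolean |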

Suddenly our PetOwningPerson object no longer works, as the hasChildren and ownsFish ivars are at the same offset. The new Objective-C runtime can shift these offsets when it detects a conflict like this, which gives us the following:

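Person

| Offset | Ivar | Type |
| --- | --- | --- |
| 0 | name | Text |
| 8 | age | Number |
| 12 | parent | Person |
| 20 | hasChildren | Boolean |

PetOwningPerson

| Offset | Ivar | Type |
| --- | --- | --- |
| 0 | name | Text |
| 8 | age | Number |
| 12 | parent | Person |
| 20 | hasChildren | Boolean |
| 21 | ownsFish | Boolean |
| 22 | ownsCats | Boolean |
| 23 | ownsDogs | Boolean |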
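The difference between the two runtimes can be simulated in Python using the simplified sizes from the example above (Text 8, Number 4, Person 8 and Boolean 1 bytes, which are not real Objective-C sizes). The app's offsets are computed against the old Person, then the OS ships a larger Person. The legacy runtime uses the app's offsets as-is; the newer runtime slides them by however much the superclass grew. This is only a sketch of the idea with hypothetical names; the real mechanism is described in Greg Parker's post mentioned below.

```python
# Simplified sizes from the example (not real Objective-C sizes).
SIZES = {"Text": 8, "Number": 4, "Person": 8, "Boolean": 1}


def layout(ivars, start=0):
    """Assign consecutive offsets to (name, type) pairs, starting at `start`."""
    offsets, offset = {}, start
    for name, kind in ivars:
        offsets[name] = offset
        offset += SIZES[kind]
    return offsets, offset  # the final offset is the total size


person_v1 = [("name", "Text"), ("age", "Number"), ("parent", "Person")]
person_v2 = person_v1 + [("hasChildren", "Boolean")]  # Apple's later update
pets = [("ownsFish", "Boolean"), ("ownsCats", "Boolean"), ("ownsDogs", "Boolean")]

# The app is compiled against version 1 of Person.
_, compiled_super_size = layout(person_v1)
compiled_pets, _ = layout(pets, start=compiled_super_size)

# The user then installs an OS update that ships version 2 of Person.
super_offsets, actual_super_size = layout(person_v2)

# Legacy runtime: the offsets baked into the app are used unchanged.
legacy = compiled_pets
# Newer runtime: slide the subclass's ivars by however much the superclass grew.
slide = actual_super_size - compiled_super_size
non_fragile = {name: off + slide for name, off in compiled_pets.items()}

print(super_offsets["hasChildren"], legacy["ownsFish"])       # 20 20 (collision)
print(super_offsets["hasChildren"], non_fragile["ownsFish"])  # 20 21 (no overlap)
```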

The behaviour of the legacy runtime effectively means that Apple can never update their existing objects with new ivars without breaking existing apps. In practice they have found ways around this, but these workarounds are very difficult, which reduces the time they could spend on new features and bug fixes. Indeed, the difficulty of having to support the legacy runtime has very likely contributed to some APIs on iOS (which has only ever used the new runtime) not making it back to the Mac.

Note that the numbers and data types used here are just simplified examples, not actual values or types. For an example using actual values you would find in Objective-C, see this post by Greg Parker which served as reference for my post.

Other Reasons

While the fragile ivar problem is a big part of the desire to drop 32 bit, it's representative of a bigger issue Apple has been hoping to fix: they have a lot of legacy cruft in their OS. The Carbon APIs have been deprecated for many years, having never been migrated to 64 bit, but they still need to exist for 32 bit apps. Some of those APIs date back to the original Mac. Many other APIs that were deprecated with the move to 64 bit are in a similar situation, with many workarounds in Apple's code to keep supporting them.

The problem with these APIs is that as fewer and fewer developers use them, there are fewer and fewer people to notice any bugs, and in particular any potential security flaws. There are also fewer resources inside Apple to invest in maintaining these APIs to fix any such flaws.

The potential switch to ARM also plays into this, as none of these older technologies have ever existed on ARM. Apple could have held off on dropping 32 bit until ARM forced their hand, and that would certainly have made the reasons easier for people to understand. However, it would have meant holding macOS back for even longer.

Backwards compatibility ultimately has a cost. It's a cost in maintaining older technologies. It's a cost in slowing down newer technologies. It's a cost in increased surface area for attacks. There is no right answer as to how much of those costs you should take on as a developer. Microsoft has opted to absorb the costs and provide extensive backwards compatibility, seeing the benefits it brings as more valuable than these costs. Apple takes more of a middle-ground approach, maintaining backwards compatibility to a point, but eventually always favouring periodically clearing out dated technology to keep moving forward.

Hopefully this has given you a more in-depth look at how Apple has managed technology transitions and backwards compatibility over the years. While it can seem that Apple doesn't care about backwards compatibility at all, they spend a lot of time weighing up the pros and cons before dropping support for anything. It's also interesting how much effort they do put into backwards compatibility, given the sweeping changes they have undergone over the past 30 years, even if that compatibility is only ever temporary.